Introduction

FastAPI has been gaining momentum as the go-to Python backend framework. The Python Developers Survey 2022 Results indicated that a solid 25% of Python developers used FastAPI last year. What’s drawing developers in is its simplicity and streamlined approach to solving typical API development challenges, such as handling data validation, serialization, and documentation with minimal code overhead. FastAPI essentially takes care of these hurdles right out of the box!

FastAPI is built on top of Starlette, a lightweight ASGI framework and toolkit that enables the smooth development of asynchronous web services in Python. This makes integrating concurrent libraries a walk in the park. Just add the async and await keywords in the right places (don’t worry, we’ll delve into this soon), and FastAPI handles the rest, managing the complexity of concurrency under the hood.

In the upcoming sections, we’ll explore the concept of concurrency and how FastAPI leverages it. Plus, we’ll get hands-on with a simple project to uncover a common pitfall that emerges from misusing the async and await keywords alongside FastAPI.

About concurrency in Python

Before talking about concurrency in Python, let’s describe concurrency. The dictionary describes concurrency or concurrent programming as the ability to execute more than one program or task simultaneously.

A familiar example of concurrency is juggling multiple conversations in your messaging app of choice. Imagine you’re chatting with your best friend and your crush at the same time. Each time you reply to a message from your best friend and switch chats to answer your crush, you essentially pause one task (the chat with your best friend) and switch over to another task (the chat with your crush). Then, when your best friend responds, you wrap up your message to your crush, go back to the chat with your best friend (which retains its chat history, or state), and repeat this process for each conversation.

Keep in mind that performing several tasks at literally the same time, in parallel, also falls under the realm of concurrency. In the context of computing, this implies that concurrency can be achieved with both single-core and multi-core processors. With a single-core processor, you can employ pre-emptive multitasking or cooperative multitasking. With a multi-core processor, parallelism also comes into play.

Let’s focus on the strategies that also work on a single core, since they are the ones relevant to FastAPI: pre-emptive and cooperative multitasking. The key difference between the two approaches lies in who’s responsible for managing the order of execution.

In the case of pre-emptive multitasking, this responsibility is handed over to the operating system (OS). Essentially, what this means is that when we write and run a program, the OS can step in and decide to pause it in favor of executing another task.

On the other hand, in the case of cooperative multitasking, it’s the task itself that signals when it’s ready to pause, allowing another task to be executed. This implies that we need to write our code in a way that explicitly indicates when a task can be paused, enabling the task executor to initiate or resume another task.

How is cooperative multitasking implemented in Python?

As mentioned earlier, when working with a single-core processor, we can opt for pre-emptive multitasking or cooperative multitasking. In Python, pre-emptive multitasking is made possible through the threading module, while the asyncio module facilitates cooperative multitasking. The threading module enables the creation of threads to carry out tasks concurrently, with the operating system taking charge of managing their execution. Now, let’s dive deeper into the concept of cooperative multitasking, as it aligns with the principles that underlie FastAPI’s functionality.
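For contrast, before moving on, here is a minimal sketch of the pre-emptive side using the standard threading module. The worker function and the thread names are made up for illustration. Notice that nothing in the code says where a switch between threads may happen; that decision is left to the operating system.

```python
import threading

results = []
lock = threading.Lock()

def worker(name: str, steps: int) -> None:
    # The OS may pause this thread at any point and run another one;
    # the code never states where a switch is allowed.
    for step in range(steps):
        with lock:  # serialize access to the shared list
            results.append((name, step))

threads = [threading.Thread(target=worker, args=(name, 3)) for name in ("A", "B")]
for t in threads:
    t.start()
for t in threads:
    t.join()  # wait until both threads have finished

# The interleaving of A and B can differ between runs, so sort before printing.
print(sorted(results))
```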

In asyncio, there’s the concept of the event loop. The event loop is in charge of controlling how and when each task is executed, so it knows the state of each task. For simplicity, let’s assume that tasks can have two states: ready and waiting. The ready state means that the task can be executed, while the waiting state means that the task is waiting for some external event it depends on to finish, for instance a database response or a write to a file.

So, in this simplified model, the event loop keeps two lists of tasks: the ready list and the waiting list. When the event loop executes a task, the task is in complete control until it pauses or finishes, and then it hands control back to the event loop. Once the event loop has control again, it places the task in the proper list and checks whether any task in the waiting list has become ready. If so, it moves that task to the ready list. After that, it takes the next task available in the ready list and executes it. This process is repeated again and again.
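The simplified model above can be sketched in a few lines of plain Python. This is a toy loop for illustration only, not how asyncio is implemented: tasks are generators, a yield plays the role of “pause me”, and the yielded number is an assumed delay that stands in for an external event such as a file write.

```python
import time
from collections import deque

def task(name, delay, log):
    log.append(f"{name}: start")
    # Yielding hands control back to the loop; the value says how long
    # the simulated external event will take.
    yield delay
    log.append(f"{name}: done")

def run(tasks):
    ready = deque(tasks)  # tasks that can run right now
    waiting = []          # (wake_up_time, task) pairs waiting on an event
    while ready or waiting:
        # Move every task whose event has finished to the ready list.
        now = time.monotonic()
        for item in [w for w in waiting if w[0] <= now]:
            waiting.remove(item)
            ready.append(item[1])
        if not ready:
            time.sleep(0.001)  # nothing to run yet; avoid a busy loop
            continue
        current = ready.popleft()
        try:
            delay = next(current)  # run until the task pauses itself
            waiting.append((time.monotonic() + delay, current))
        except StopIteration:
            pass  # the task finished; drop it

log = []
run([task("A", 0.05, log), task("B", 0.01, log)])
print(log)
```

On a typical run, both tasks start before either finishes, and B (the shorter wait) finishes first: A: start, B: start, B: done, A: done.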

async and await keywords

To support cooperative multitasking with asyncio, Python introduced the async and await keywords. The await keyword announces that the task hands control back to the event loop because it’s waiting for some external event to finish.

The async keyword is used when defining a function to tell Python that the function is a coroutine, which in practice means it will use the await keyword at some point. There are some exceptions to this, such as asynchronous generators. However, this is true for the majority of cases, so no need to worry about it for now.
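A minimal sketch of both keywords in action, using only the standard library. The fetch function is a made-up name, and asyncio.sleep stands in for a real I/O wait such as a database call.

```python
import asyncio

async def fetch(name: str, delay: float, log: list) -> str:
    log.append(f"{name}: start")
    # await hands control back to the event loop until the sleep is over.
    await asyncio.sleep(delay)
    log.append(f"{name}: done")
    return name

async def main() -> list:
    log = []
    # gather schedules both coroutines on the same event loop.
    await asyncio.gather(fetch("A", 0.05, log), fetch("B", 0.01, log))
    return log

events = asyncio.run(main())
print(events)
```

While A is waiting, the event loop is free to start B, so both waits overlap instead of running one after the other.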

FastAPI and async

Returning to FastAPI, we mentioned that it’s built on the concept of concurrent programming. In addition, we know that it uses cooperative multitasking. Under the hood, it uses AnyIO, an abstraction layer over two of Python’s main asynchronous libraries: asyncio (the standard-library module we have discussed) and trio (a third-party library). AnyIO lets us choose which of the two backends to run on, with asyncio being the default one.

Since FastAPI abstracts the use of AnyIO, we don’t need to know how to use AnyIO. Still, it’s essential to understand the concepts of cooperative multitasking because without keeping them in mind, we might run into some awkward behaviors along the way. One common example is what we’re about to see in the following lines.

Building a small project

Let’s get our hands dirty and build an API with FastAPI to understand how this works. As part of this exercise, we’ll incorporate a typical time-consuming task: writing to a file. It will become evident that if we don’t handle the async and await keywords with care, we could encounter significant performance issues within our API.

We will build the following two endpoints.

- GET /hello: This endpoint will simply return a hello world message.

Expected response: {"message": "Hello world"}

- GET /write: This endpoint will write some data to a file, and then return the amount of time spent on executing that task.

Expected response: {"waiting_time": 5.01, "unit": "seconds"}

Side note: For simplicity, we’re using a GET method here, but POST would be the proper HTTP method, as we are writing to a file.

Setup

Firstly, it’s recommended to create a Python virtual environment. Then, execute the following command to install FastAPI.

~ pip install fastapi

You will also need an ASGI server, such as Uvicorn or Hypercorn. Let’s use Uvicorn as it supports the asyncio library.

~ pip install "uvicorn[standard]"

Implementing our API

We will write a Python file called main.py. Here, we will define our endpoints. Let’s start defining the first endpoint:

# main.py
from fastapi import FastAPI

app = FastAPI()

@app.get("/hello")
def hello():
    return {
        "message": "Hello world"
    }

As we can see, we first need to instantiate a FastAPI application, and then we can define our endpoints by using the @app.get("/hello") decorator. In this case, we are defining the GET /hello endpoint. Then, we define the behavior of our endpoint inside the hello function. As we discussed, we will be responding with a “Hello world” message.

Now, we can run our API with the following command:

~ uvicorn main:app --reload

This will start a local Uvicorn server on port 8000. You can access it by opening http://127.0.0.1:8000/hello in your browser. The --reload flag makes the server restart whenever our code changes, so the running API always reflects the latest version. When accessing the mentioned URL, you will see our Hello World message.

Now, before building the second endpoint, we need to code the logic to write a large amount of data to a file. To do that, we will create a new Python file called write_to_file.py with the following code:

# write_to_file.py
from tempfile import TemporaryFile

def write_to_file(num_chars: int = 4000000000):
    content = b"a" * num_chars
    with TemporaryFile() as f:
        f.write(content)

The function write_to_file will create a temporary file and write the byte "a" to it num_chars times (4,000,000,000 by default).
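Note that the default argument builds roughly 4 GB of bytes in memory before writing them. To check that the function works without waiting for the full write, it can be called with a much smaller size. The sketch below repeats the function so that it runs on its own; the 1,000,000 figure is an arbitrary value chosen for a quick check.

```python
import time
from tempfile import TemporaryFile

def write_to_file(num_chars: int = 4000000000):
    content = b"a" * num_chars
    with TemporaryFile() as f:
        f.write(content)

# 1 MB instead of the default ~4 GB, so the check finishes quickly.
start_time = time.time()
write_to_file(num_chars=1_000_000)
elapsed = time.time() - start_time
print(f"wrote 1,000,000 bytes in {elapsed:.4f} seconds")
```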

Now, we can create the endpoint GET /write in the main.py file:

# main.py
import time

from write_to_file import write_to_file

@app.get("/write")
def write():
    # Measure how long the blocking write takes.
    start_time = time.time()
    write_to_file()
    waiting_time = time.time() - start_time
    return {"waiting_time": waiting_time, "unit": "seconds"}

This endpoint will execute the write_to_file function, and then return the time spent to execute it.

So, if we go to http://127.0.0.1:8000/write, we will see that it will take some time to finish and we will get that time in the response.

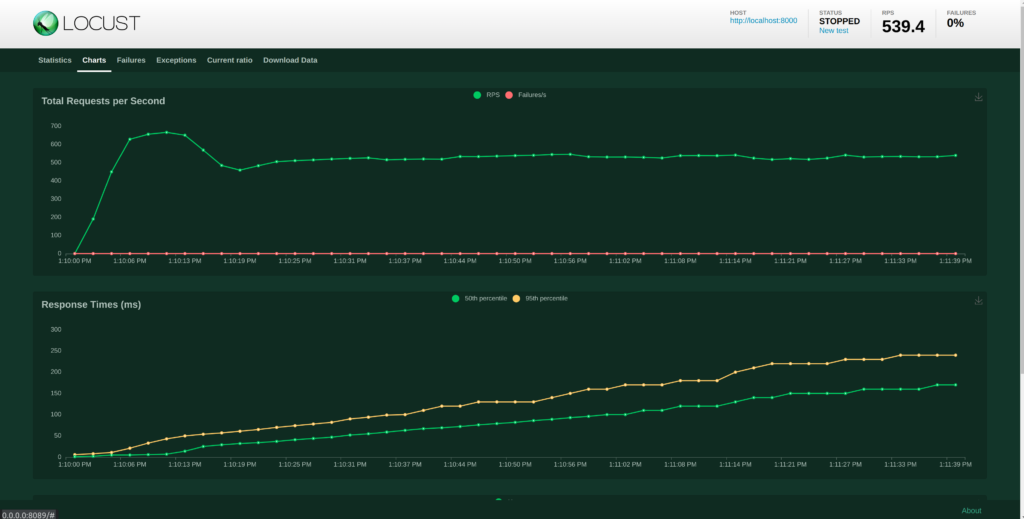

To analyze the performance of this API, we’ve built a performance test with 100 users. The test has been configured to spawn a new user every second. This will be very useful for comparing the performance of other implementations of the file-writing endpoint. So, there will be up to 100 users accessing the GET /hello and GET /write endpoints simultaneously. The test will show us the requests per second (RPS) as a function of active users.

As we can see here, as the number of users increases, at the beginning it tends to increase the RPS, but at some point, it converges to about 550 RPS.

Where are the async and await keywords?

You may be wondering why there are no async or await keywords in the code above. Still, it works perfectly: in the performance tests, we’ve seen a reasonable RPS.

So, why is it working? Shouldn’t we need to use the keywords to make it work?

The answer to this is no, as we have already seen. This is because of how FastAPI is implemented under the hood. The documentation explains that when an endpoint function is declared without the async keyword, it’s executed in an external thread pool[1] [2] that is then awaited, instead of being called directly by the event loop.

What does this mean? Recalling the explanation above about asyncio, functions declared with async run directly on the event loop and keep control of it until they await something. So, if such a function takes too long to execute and never hands control back with the await keyword, as would happen with our write_to_file function, it will block the event loop. This means the event loop cannot do anything else in the meantime, such as executing other tasks.
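The dispatch rule described in the documentation can be pictured roughly as follows. This is a hypothetical dispatcher written with the standard library only, not FastAPI’s actual code; call_endpoint, sync_handler and async_handler are names invented for the sketch.

```python
import asyncio
import inspect
import threading

def sync_handler() -> str:
    return threading.current_thread().name

async def async_handler() -> str:
    return threading.current_thread().name

async def call_endpoint(handler):
    # Hypothetical dispatcher, not FastAPI's actual implementation.
    if inspect.iscoroutinefunction(handler):
        return await handler()  # runs directly on the event loop's thread
    loop = asyncio.get_running_loop()
    # Plain functions are sent to the default thread pool and awaited.
    return await loop.run_in_executor(None, handler)

async def main():
    return await call_endpoint(sync_handler), await call_endpoint(async_handler)

sync_thread, async_thread = asyncio.run(main())
print(sync_thread, async_thread)
```

The two printed thread names differ: the plain function ran on a worker thread, while the coroutine ran on the thread that owns the event loop.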

Let’s see an example of this. We’ll add a GET /write_bad endpoint that is identical to GET /write except for the async keyword:

# main.py
@app.get("/write_bad")
async def write_bad():
    start_time = time.time()
    write_to_file()
    waiting_time = time.time() - start_time
    return {"waiting_time": waiting_time, "unit": "seconds"}

Let’s see the performance test result.

The performance test shows a drop in RPS. In this case, it settles at about 205 RPS, less than half of the previous result!

This is because the write_bad function runs on the event loop, and it blocks the loop for as long as write_to_file takes to finish writing. In the previous example, the write function was not called by the event loop but by one of the threads in the thread pool. That is why it wasn’t blocking, which led to a good result in the performance test.
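The blocking effect can be reproduced without FastAPI. In the sketch below, which uses assumed names and timings, a heartbeat coroutine tries to record a timestamp every 10 ms, while blocking_endpoint calls time.sleep as a stand-in for the blocking write_to_file call inside an async function.

```python
import asyncio
import time

async def heartbeat(ticks: list, stop: asyncio.Event) -> None:
    # Records a timestamp every 10 ms while the loop is free to run it.
    while not stop.is_set():
        ticks.append(time.monotonic())
        await asyncio.sleep(0.01)

async def blocking_endpoint() -> None:
    time.sleep(0.2)  # blocking call: never yields to the event loop

async def main() -> float:
    ticks, stop = [], asyncio.Event()
    beat = asyncio.create_task(heartbeat(ticks, stop))
    await asyncio.sleep(0.05)  # let the heartbeat tick a few times
    await blocking_endpoint()  # the loop is stuck here for about 0.2 s
    await asyncio.sleep(0.05)
    stop.set()
    await beat
    # Largest gap between two consecutive heartbeats.
    return max(b - a for a, b in zip(ticks, ticks[1:]))

largest_gap = asyncio.run(main())
print(f"largest heartbeat gap: {largest_gap:.2f} s")
```

The largest gap comes out at roughly the duration of the blocking call: for that whole time, no other task on the loop could run.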

But I want to use async and await keywords in my endpoint explicitly

To fix the problem stated above while still using async and await, we can use threads or processes. In this case, threads are preferable because writing to a file is an I/O-bound operation.

As we’ve mentioned before, FastAPI uses AnyIO for concurrency. AnyIO provides the function to_thread.run_sync(), which lets you execute a function in a separate thread and await it. This way, the thread running the event loop will not be blocked.
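The same idea can be tried with the standard library alone. The sketch below swaps in asyncio.to_thread (Python 3.9+), a standard-library analogue used here so the example runs without extra packages; it is not the AnyIO call itself. slow_write is a made-up stand-in for write_to_file.

```python
import asyncio
import time

def slow_write() -> str:
    time.sleep(0.2)  # stands in for the blocking write_to_file call
    return "written"

async def main() -> tuple:
    start = time.monotonic()
    # Each call runs in a worker thread; the event loop only awaits them.
    results = await asyncio.gather(*(asyncio.to_thread(slow_write) for _ in range(3)))
    return results, time.monotonic() - start

results, elapsed = asyncio.run(main())
print(results, f"{elapsed:.2f} s")
```

The three blocking calls overlap, so the total time stays close to a single call rather than three calls in sequence.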

Let’s experiment with it. Now, let’s apply the following changes:

# main.py
from write_to_file import write_to_file
from anyio import to_thread

@app.get("/write_with_thread")
async def write_with_thread():
    start_time = time.time()
    await to_thread.run_sync(write_to_file)
    waiting_time = time.time() - start_time
    return {"waiting_time": waiting_time, "unit": "seconds"}

Let’s check the result of the performance test:

As expected, we’ve recovered the performance: the RPS settles at around 540.

However, this approach is not recommended, as it essentially duplicates functionality already offered by FastAPI. The benefit of relying on FastAPI’s built-in behavior is the reduced code overhead (just don’t use the async keyword in the endpoint function).

So… when is it appropriate to use async and await?

Use them when your endpoint relies on an asynchronous library, for instance aiofiles, an asynchronous library for file operations. This approach is recommended here because aiofiles is designed precisely for this case. Let’s write some code that exemplifies it.

Firstly, we need to install aiofiles using the following command:

~ pip install aiofiles

We will be writing to a file as before, but using this library. Let’s create a file called write_to_file_async.py and add the following code:

# write_to_file_async.py
from aiofiles.tempfile import TemporaryFile

async def write_to_file_async(num_chars: int = 4000000000):
    content = b"a" * num_chars
    async with TemporaryFile() as f:
        await f.write(content)

Let’s create a new endpoint called GET /write_async that calls this function:

# main.py
from write_to_file_async import write_to_file_async

@app.get("/write_async")
async def write_async():
    start_time = time.time()
    await write_to_file_async()
    waiting_time = time.time() - start_time
    return {"waiting_time": waiting_time, "unit": "seconds"}

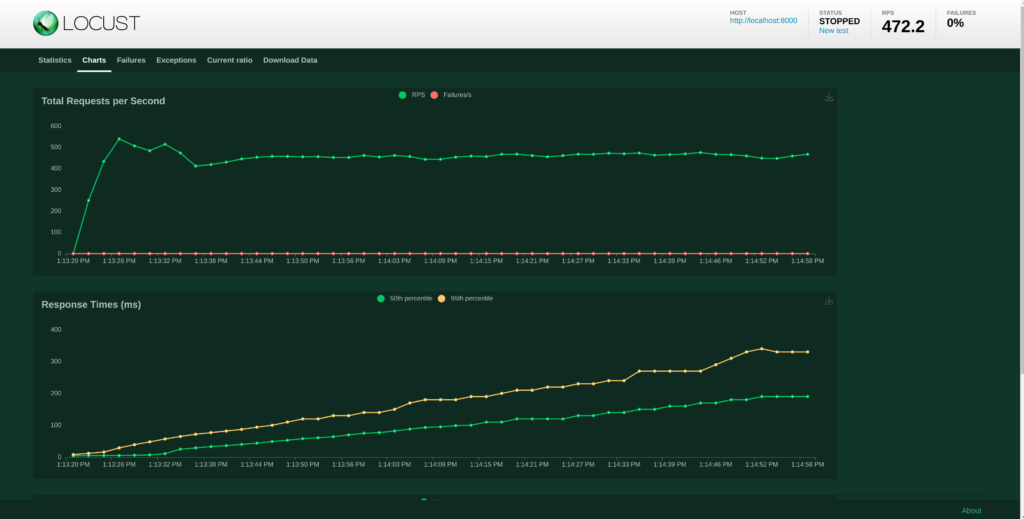

Let’s check the performance results of this approach:

The resulting RPS stands at approximately 470, slightly lower than the initial 550 RPS achieved when using non-concurrent built-in methods for file operations, specifically within the GET /write endpoint.

However, it’s important to note a key distinction between the two cases. The GET /write endpoint relied on FastAPI’s thread pool, with each request’s handler occupying a worker thread while it wrote. The GET /write_async handler, by contrast, ran as a coroutine on the event loop’s single thread and only awaited the file operations (aiofiles itself hands the blocking file calls to worker threads behind the scenes). Even so, it handled a comparable throughput, which puts the observed difference in perspective. This shows what FastAPI can do with concurrency.
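The idea that one event-loop thread can keep many waiting operations in flight can be illustrated with the standard library alone. In this sketch, asyncio.sleep stands in for an awaited I/O operation and fake_io is a made-up name; it does not reproduce how aiofiles works internally, and its timings are not comparable with the benchmark above.

```python
import asyncio
import threading
import time

async def fake_io(thread_ids: set) -> None:
    thread_ids.add(threading.get_ident())  # record which thread ran us
    await asyncio.sleep(0.1)  # stands in for an awaited I/O operation

async def main():
    thread_ids = set()
    start = time.monotonic()
    # 100 coroutines wait at the same time on one event loop.
    await asyncio.gather(*(fake_io(thread_ids) for _ in range(100)))
    return thread_ids, time.monotonic() - start

thread_ids, elapsed = asyncio.run(main())
print(len(thread_ids), f"{elapsed:.2f} s")
```

All 100 coroutines report the same thread, and the total time stays close to a single 0.1 s wait rather than 100 of them.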

Conclusion

FastAPI is a powerful framework that seamlessly integrates with concurrent libraries in Python. However, it’s crucial to handle the async/await keywords with care, especially when we’re not using any concurrent library, as they could hurt our API’s performance. To ensure smooth API operations, it’s best to use these keywords only when we’re working with asynchronous libraries in our code. So, before diving into FastAPI, make sure that the engineering team understands the advantages and disadvantages, enabling them to make an informed decision about the best strategy to follow.

References

- https://en.wikipedia.org/wiki/Concurrency_(computer_science)

- https://www.teradata.com/Glossary/What-is-Concurrency-Concurrent-Computing#:~:text=Concurrency%20or%20concurrent%20computing%20refers,specific%20applications%20or%20across%20networks

- https://realpython.com/python-concurrency/#:~:text=Take%20the%20Quiz%20%C2%BB-,What%20Is%20Concurrency%3F,instructions%20that%20run%20in%20order

- https://realpython.com/python-concurrency/#asyncio-version

- https://stackoverflow.com/questions/49005651/how-does-asyncio-actually-work/51116910#51116910